by Nov 27, 2018

Managing federal IT infrastructure is already a high-stakes balancing act. Navigating strict government mandates adds another layer of complexity, one that traditional, manual network operations were never built to handle. For years, many network teams have relied on brute force to keep services running, often focusing on task execution rather than long-term strategy. This approach leaves little room for innovation, which helps explain the slow adoption of automation across many environments.

But the landscape is shifting. Federal IT requirements mandated by the Cybersecurity and Infrastructure Security Agency (CISA) are becoming more stringent. Meeting them without automation is difficult and unrealistic. Government network automation, especially through no-code platforms, offers a scalable, efficient way to meet compliance goals while supporting daily operations. It reduces manual effort, improves accuracy, and frees engineers to focus on higher-value work.

The federal government IT sector can be difficult to navigate: the sheer number of security measures, compliance standards, and industry and workforce practices sets it apart from many other industries. Networks that serve federal agencies are experiencing more severe growing pains than those in other industries because they were traditionally locked-down environments where changes happened infrequently.

As federal government IT operators come under increased pressure to modernize, it becomes clear that much of their infrastructure isn’t prepared to enter the modern age with them. For example, the Pentagon failed its first audit, with many auditors noting issues with compliance, cybersecurity policies, and inventory accuracy.

With this in mind, it’s important to understand the elements that make the federal government’s IT sector different.

Here are a few use cases that benefit from our intent-based network automation platform.

Network assessment has always been a goal for IT leaders, but it isn’t easy to implement. Because of its complexity, private sector assessments occur infrequently, around once every three years. Because of clearances and data sensitivity, they happen even less often in the government sector.

While Federal Civilian Executive Branch (FCEB) agencies had largely ignored this best practice, in 2022 CISA issued Binding Operational Directive (BOD) 23-01, whose requirements include automated asset discovery every seven days.

Meeting this requirement demands network automation, especially if you have thousands of assets in your purview. Verifying that each asset exists and checking it for anomalies every seven days is impossible without it.

NetBrain is the answer for CISA’s BOD 23-01. NetBrain allows fully automated discovery and verification as often as you like. It identifies devices that should be on the network but are missing and devices that are on the network but should not be. NetBrain then goes much deeper by establishing the flow of data between services and applications and identifying changes from the expected behaviors in real time.
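The "missing versus unexpected" comparison described above comes down to two set differences. The following is a minimal sketch in plain Python, not NetBrain's API: the `reconcile` function, the `ReconciliationReport` type, and all hostnames are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReconciliationReport:
    missing: frozenset      # in the approved inventory, not seen by discovery
    unexpected: frozenset   # seen by discovery, not in the approved inventory


def reconcile(expected, discovered):
    """Compare the approved inventory with the latest discovery scan."""
    expected_set = frozenset(expected)
    discovered_set = frozenset(discovered)
    return ReconciliationReport(
        missing=expected_set - discovered_set,
        unexpected=discovered_set - expected_set,
    )


# Hypothetical data: these hostnames are illustrative only.
approved_inventory = ["core-rtr-01", "core-rtr-02", "edge-fw-01", "dist-sw-07"]
latest_scan = ["core-rtr-01", "edge-fw-01", "dist-sw-07", "unknown-ap-33"]

report = reconcile(approved_inventory, latest_scan)
print("Missing:", sorted(report.missing))
print("Unexpected:", sorted(report.unexpected))
```

Running a comparison like this on a schedule is what turns a one-off inventory into the recurring verification the directive asks for.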

We recently worked on a finance-related project that involved examining both the legacy and refresh environments and determining the security posture of each. It was a straightforward financial application, but the client needed to hit a number of Service Level Agreements (SLAs) that the old equipment wasn’t equipped to meet. To make things worse, much of the new equipment had to be set to FIPS-CC compliance mode, which inadvertently wiped the security settings on the refresh environment clean.

Over the course of six weeks, we evaluated individual config files, weighed the trade-offs between hardening certain systems and meeting SLAs, and made sure the new system as a whole was as secure as the one that came before it. Ultimately, this resulted in a lot of manual work and documentation, as older legacy systems were pulled apart and examined to help recreate golden configuration files for the newer systems.

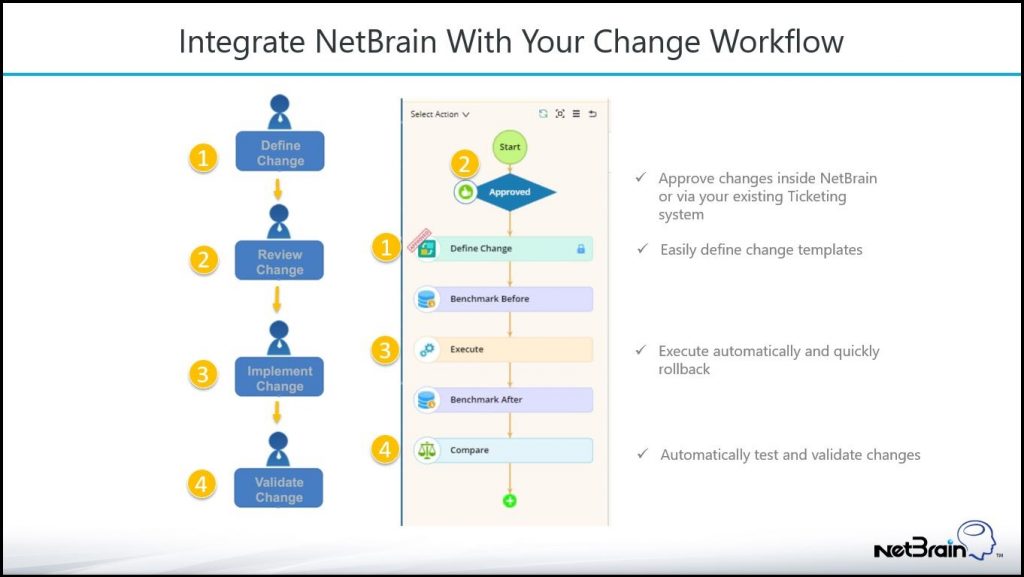

Automation would have helped here in two ways. For one, the ability to upload configuration changes on a massive scale would have saved time and effort. More importantly, the ability to run before-and-after benchmark comparisons and insert customized reporting would have made short work of the entire assignment. It would have let us see which devices were out of compliance with the golden standards and modify them all at once.
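To make the idea of a golden-configuration comparison concrete, here is a minimal sketch that uses Python's standard `difflib` module to flag devices whose running configuration has drifted from a golden file. It is not how NetBrain implements the feature; the function names, device names, and config lines are assumptions chosen for illustration.

```python
import difflib


def config_drift(golden, running):
    """Return unified-diff lines showing how `running` deviates from `golden`."""
    diff = difflib.unified_diff(
        golden.splitlines(),
        running.splitlines(),
        fromfile="golden",
        tofile="running",
        lineterm="",
    )
    return list(diff)


def non_compliant_devices(golden, running_configs):
    """Map each drifted device to its diff; compliant devices are omitted."""
    drifted = {}
    for device, config in running_configs.items():
        diff = config_drift(golden, config)
        if diff:  # an empty diff means the device matches the golden config
            drifted[device] = diff
    return drifted


# Hypothetical golden config and device configs, for illustration only.
GOLDEN = "service password-encryption\nno ip http server\nip ssh version 2\n"
running_configs = {
    "edge-fw-01": GOLDEN,
    "dist-sw-07": "service password-encryption\nip http server\nip ssh version 2\n",
}

drifted = non_compliant_devices(GOLDEN, running_configs)
for device, diff in drifted.items():
    print(device)
    print("\n".join(diff))
```

A line-by-line diff is the simplest possible comparison. Real device configurations usually need normalization first (timestamps, ordering, device-specific values), which this sketch leaves out.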

Today, more than ever, federal government IT admins rely on external service providers to carry out a wide range of services using information systems. Protecting confidential information stored in non-federal government IT systems is one of the government’s highest priorities and requires the creation of a uniquely federal cybersecurity protocol.

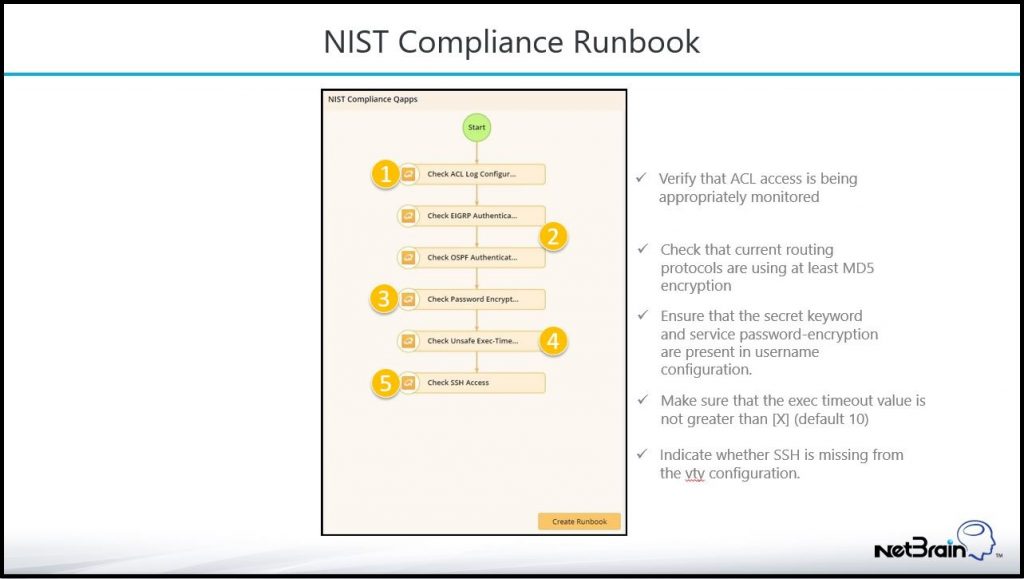

The National Institute of Standards and Technology (NIST) has created a compliance standard of recommended security controls for federal government IT systems. Federal clients endorse this standard, as it encompasses security best-practice controls across a wide range of industries.

In many instances, complying with NIST guidelines and recommendations also helps federal agencies remain compliant with other regulations, such as HIPAA, FISMA, and FIPS. NIST is focused primarily on infrastructure security and uses a value-based approach to find and protect the most sensitive data.

NetBrain fits in very well here, too. Network Intents (sometimes called Runbooks) are NetBrain’s built-in capabilities for describing and automatically executing expected network operations.

As you can see above, NetBrain performs several data collection tasks to verify that the target network functions within acceptable security parameters. NIST compliance requires the client to control access and encryption protocols to its most sensitive devices, and the larger a network becomes, the more intensive and error-prone the compliance check is.

Compliance-related Intents are especially useful for audits, as they reduce the time spent crawling between devices and clearly pinpoint where the problem areas in your network are.
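As a rough illustration of what an automated check on access and encryption settings can look like, the sketch below tests a device configuration against a small set of required and forbidden patterns. The rule names and patterns are hypothetical examples, not NIST controls and not NetBrain Intent syntax.

```python
import re

# Hypothetical rules: each maps a rule name to a regex matched line by line.
REQUIRED = {
    "ssh-v2-only": r"^ip ssh version 2$",
    "password-encryption": r"^service password-encryption$",
}
FORBIDDEN = {
    "telnet-enabled": r"^\s*transport input .*telnet",
    "http-server": r"^ip http server$",
}


def check_config(config):
    """Return the sorted names of the rules that `config` violates."""
    violations = []
    for name, pattern in REQUIRED.items():
        # A required rule is violated when no line matches its pattern.
        if not re.search(pattern, config, flags=re.MULTILINE):
            violations.append(name)
    for name, pattern in FORBIDDEN.items():
        # A forbidden rule is violated when any line matches its pattern.
        if re.search(pattern, config, flags=re.MULTILINE):
            violations.append(name)
    return sorted(violations)


# Hypothetical device configuration, for illustration only.
sample = """\
service password-encryption
ip http server
line vty 0 4
 transport input telnet ssh
"""
print(check_config(sample))
```

Applying the same rule set to every device is what keeps the check consistent as the network grows, which is where manual reviews tend to break down.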

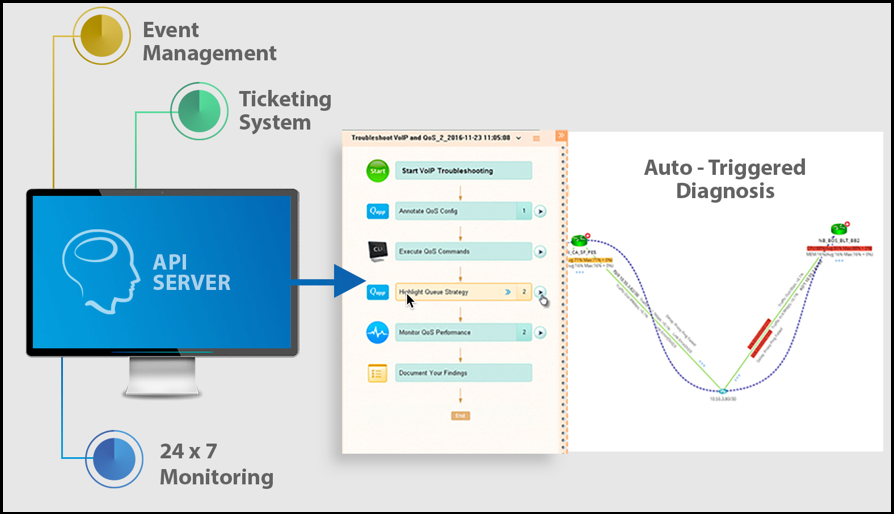

Just-In-Time Automation is an API-triggered NetBrain diagnosis that clients usually configure to run when a monitoring alert fires or a helpdesk ticket is created.

For applications on a network, one of the most common uses of Just-In-Time Automation is to reduce the Mean Time to Restoration (MTTR) for problems that occur on the network. Given the sensitive nature of many federal government IT systems, another good application for this feature is Security Remediation. An IPS will only tell you where the malicious traffic is located, but NetBrain can provide an outline of the infected area in the context of the rest of your network.

Essentially, once NetBrain is integrated with an intrusion prevention platform through the API, an alert can trigger it to identify the infected area, calculate the path between the attacker and the victim, and tag this area in a map URL for any security engineer who happens to go on-site.

This shortens the overall MTTR of the incident, as most of the initial triage work is complete by the time a human sits down to resolve it. Security incidents are as time-sensitive as network outages, if not more so, and having the ability to eliminate the fact-finding and data-collection operations means the organization will be more effective when it counts.
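The alert-driven triage described above can be sketched as a handler that receives an IPS alert and computes the path between attacker and victim. This is a simplified illustration under stated assumptions: the topology is a hypothetical adjacency map, the path is a plain breadth-first search by hop count (a real path calculation would account for routing tables, ACLs, and NAT), and `handle_ips_alert` and the alert fields are invented names rather than any NetBrain or IPS vendor API.

```python
from collections import deque


def shortest_path(topology, source, target):
    """Breadth-first search over an adjacency map; returns a hop list or None."""
    if source not in topology or target not in topology:
        return None
    previous = {source: None}  # also serves as the visited set
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:  # walk back to the source
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in topology.get(node, ()):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


def handle_ips_alert(alert, topology):
    """Build a triage summary for an alert (hypothetical alert fields)."""
    path = shortest_path(topology, alert["attacker"], alert["victim"])
    return {
        "alert_id": alert["id"],
        "path": path,
        # Devices between the two endpoints are the ones to inspect first.
        "transit_devices": path[1:-1] if path else [],
    }


# Hypothetical topology and alert, for illustration only.
TOPOLOGY = {
    "host-a": ["access-sw-1"],
    "access-sw-1": ["host-a", "dist-sw-1"],
    "dist-sw-1": ["access-sw-1", "core-rtr-1"],
    "core-rtr-1": ["dist-sw-1", "edge-fw-1"],
    "edge-fw-1": ["core-rtr-1", "server-b"],
    "server-b": ["edge-fw-1"],
}
alert = {"id": "IPS-0001", "attacker": "host-a", "victim": "server-b"}

summary = handle_ips_alert(alert, TOPOLOGY)
print(" -> ".join(summary["path"]))
```

The point of the sketch is the ordering of work: the path and the list of transit devices are already assembled when the engineer opens the incident.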

Federal IT teams often struggle with continuity during contractor transitions. Outgoing teams typically leave a few documents outlining changes and security updates, but incoming teams still need to call them back for clarification. Without overlap, the new teams waste time relearning what the previous team already knew, which drives up costs and delays operations.

Here’s how NetBrain improves documentation handoff:

NetBrain meets the unique demands of federal IT compliance requirements by preserving operational knowledge, enforcing policies, and delivering continuity across contract cycles, without compromising security or control.

Staff turnover and shifting contracts do not have to mean lost knowledge or compromised security. NetBrain captures and preserves your network’s operational intelligence in a dynamic, living model, bridging the gap between outgoing and incoming teams. With automated documentation, real-time visibility, and intent-based diagnostics, NetBrain ensures your network stays secure, compliant, and fully understood at every stage.

Ready to future-proof your federal IT operations? Schedule a demo to see NetBrain in action.
